1. Introduction

VPC Peering is a common way for producers to offer services to their consumers. However, VPC peering brings many routing complexities, such as non-transitive peering, large IP address consumption, and over-exposure of resources in a peered VPC.

Private Service Connect (PSC) is a connectivity method that allows producers to expose a service over a single endpoint that a consumer provisions in a workload VPC. This eliminates many of the issues users face with VPC peering. While many new services are being built with PSC, many existing services are still offered over VPC Peering.

Google Cloud is excited to introduce a migration path for moving services that you have created with VPC Peering to a PSC-based architecture. With this migration method, the IP address that the producer service exposes over VPC peering is preserved in the PSC-based architecture, so minimal changes are required on the consumer side. Follow along with this codelab to learn the technical steps for performing this migration.

NOTE: This migration path is only for services where the producer and consumer exist within the same Google Cloud organization. Google Cloud services and third-party services that use VPC peering will use a similar migration method, customized for each service. Please reach out to the appropriate parties to inquire about the migration path for these types of services.

What you'll learn

- How to set up a VPC peering based service

- How to set up a PSC based service

- Using the Internal-Ranges API to perform subnet migration over VPC Peering to achieve a VPC Peering to PSC service migration.

- Understanding when downtime needs to occur for service migration

- Migration clean up steps

What you'll need

- Google Cloud Project with Owner permissions

2. Codelab topology

For simplicity, this codelab centralizes all resources in a single project. Each step notes whether it is a producer-side or a consumer-side action, so you can follow along if your producers and consumers are in different projects.

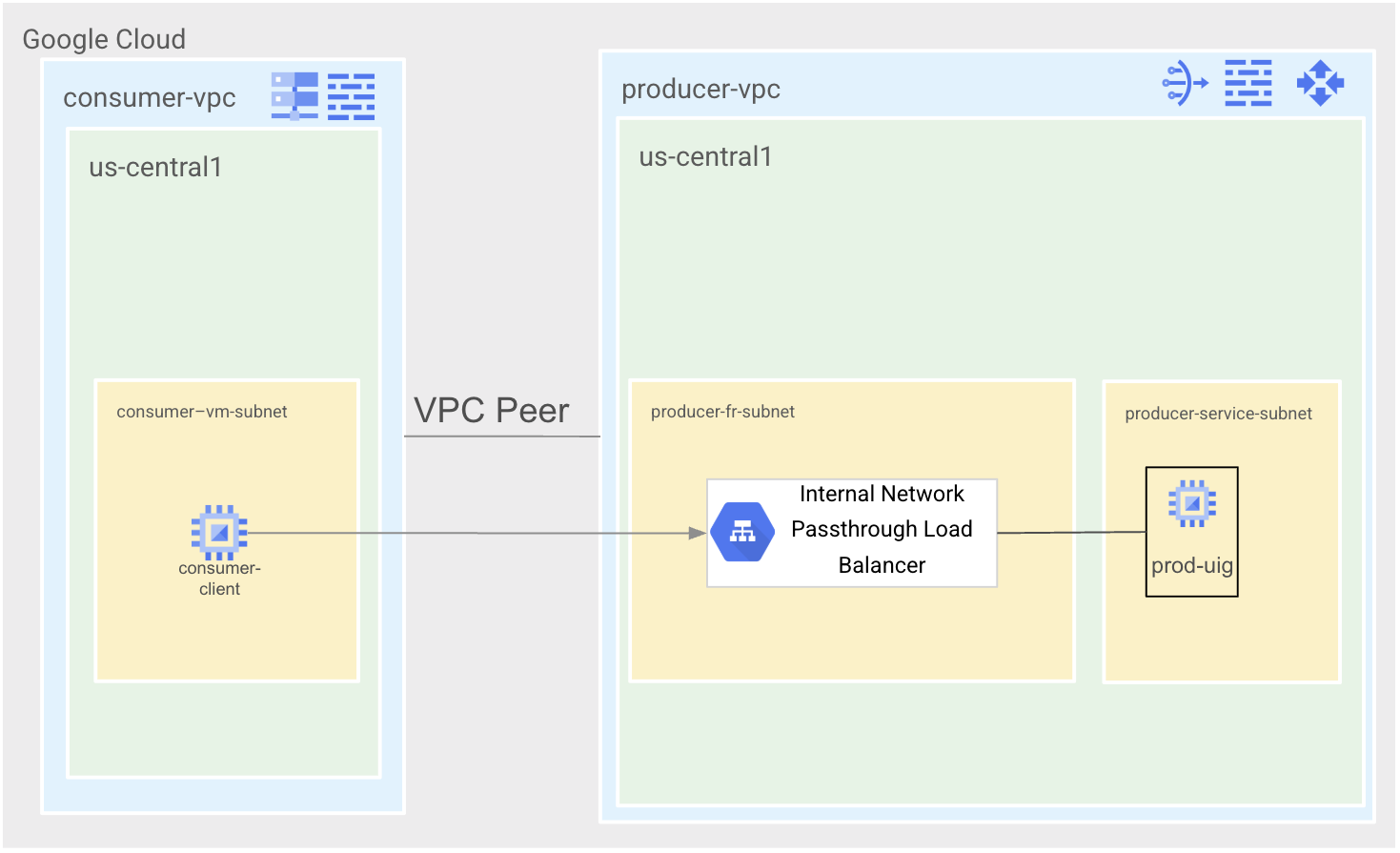

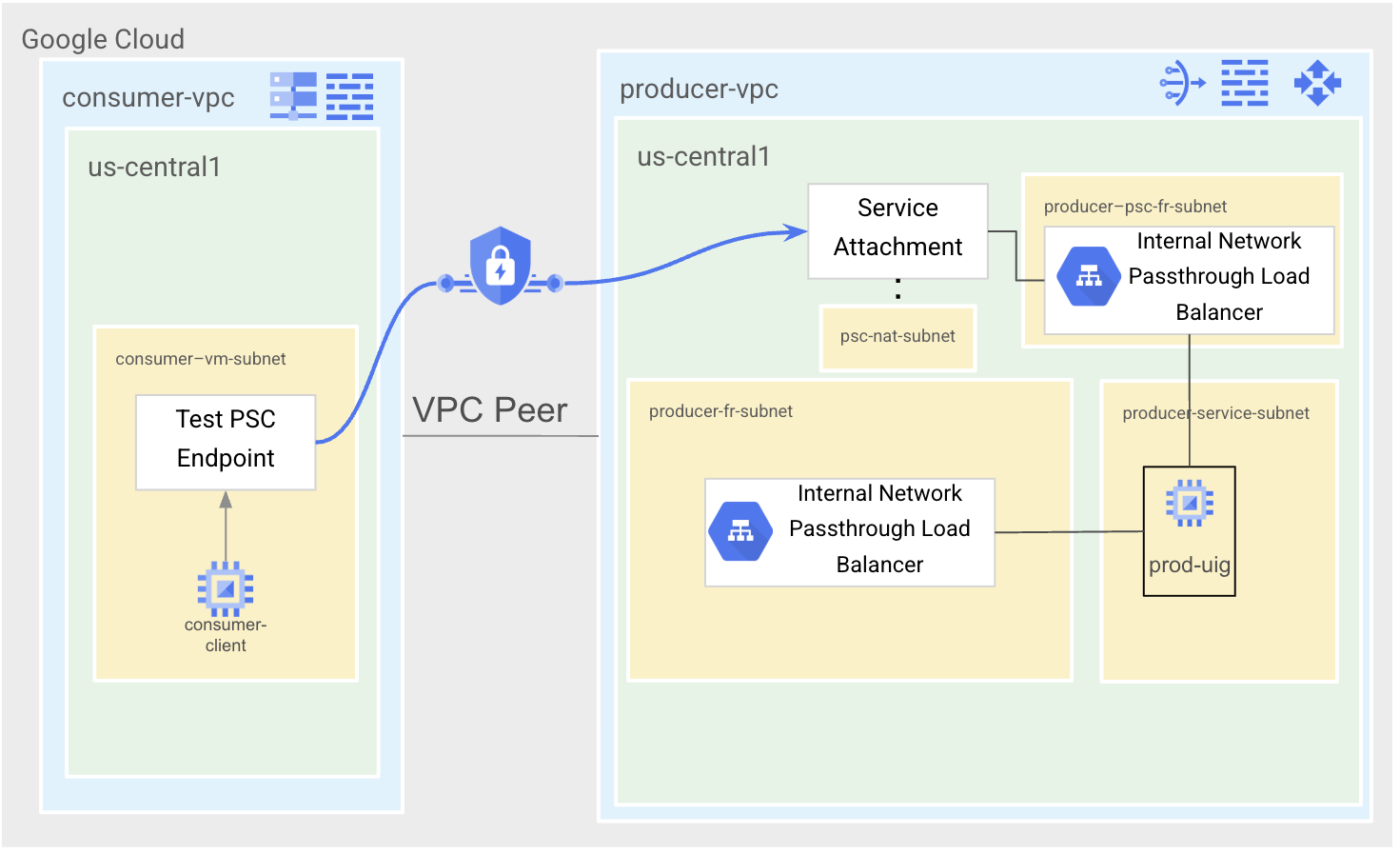

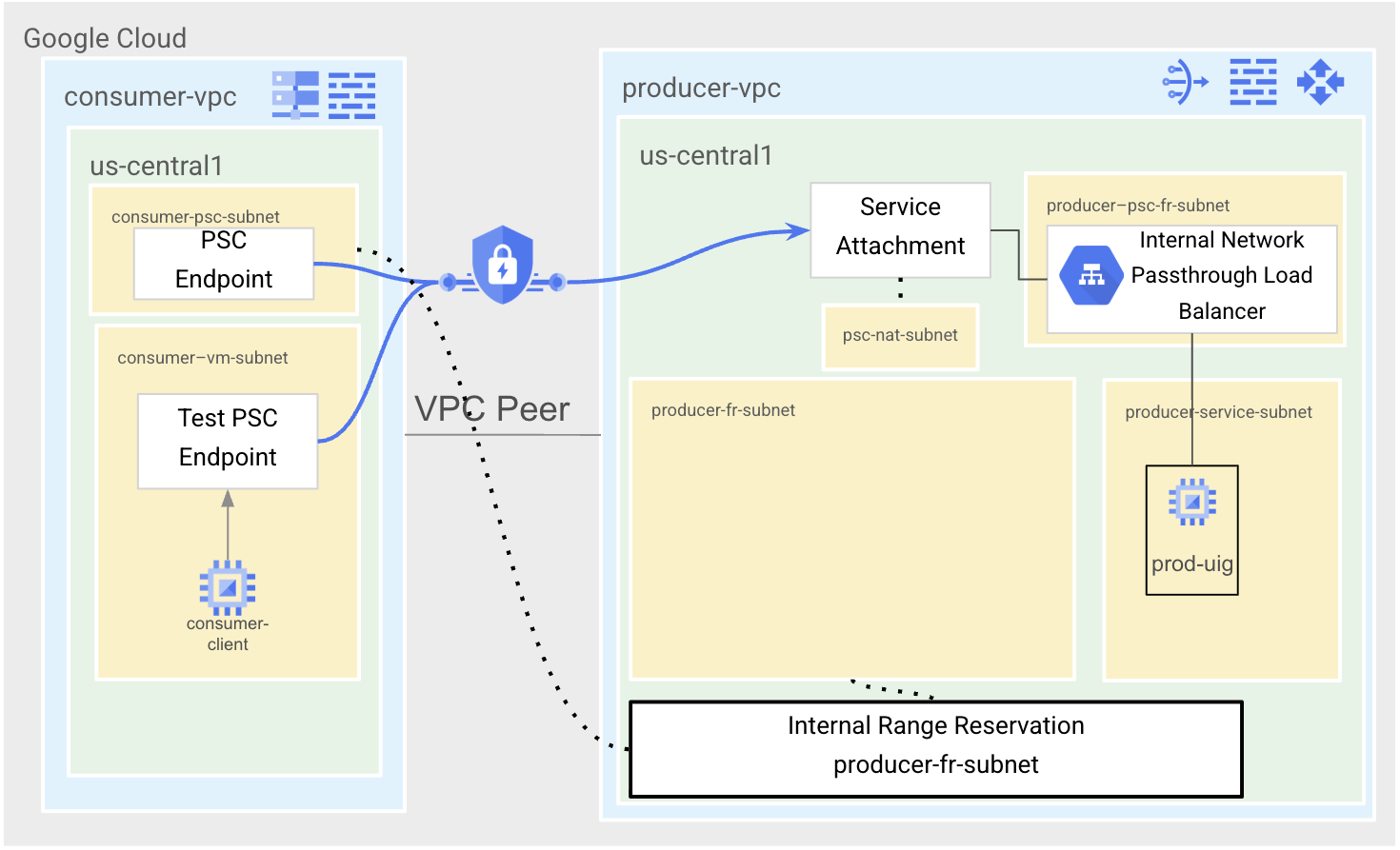

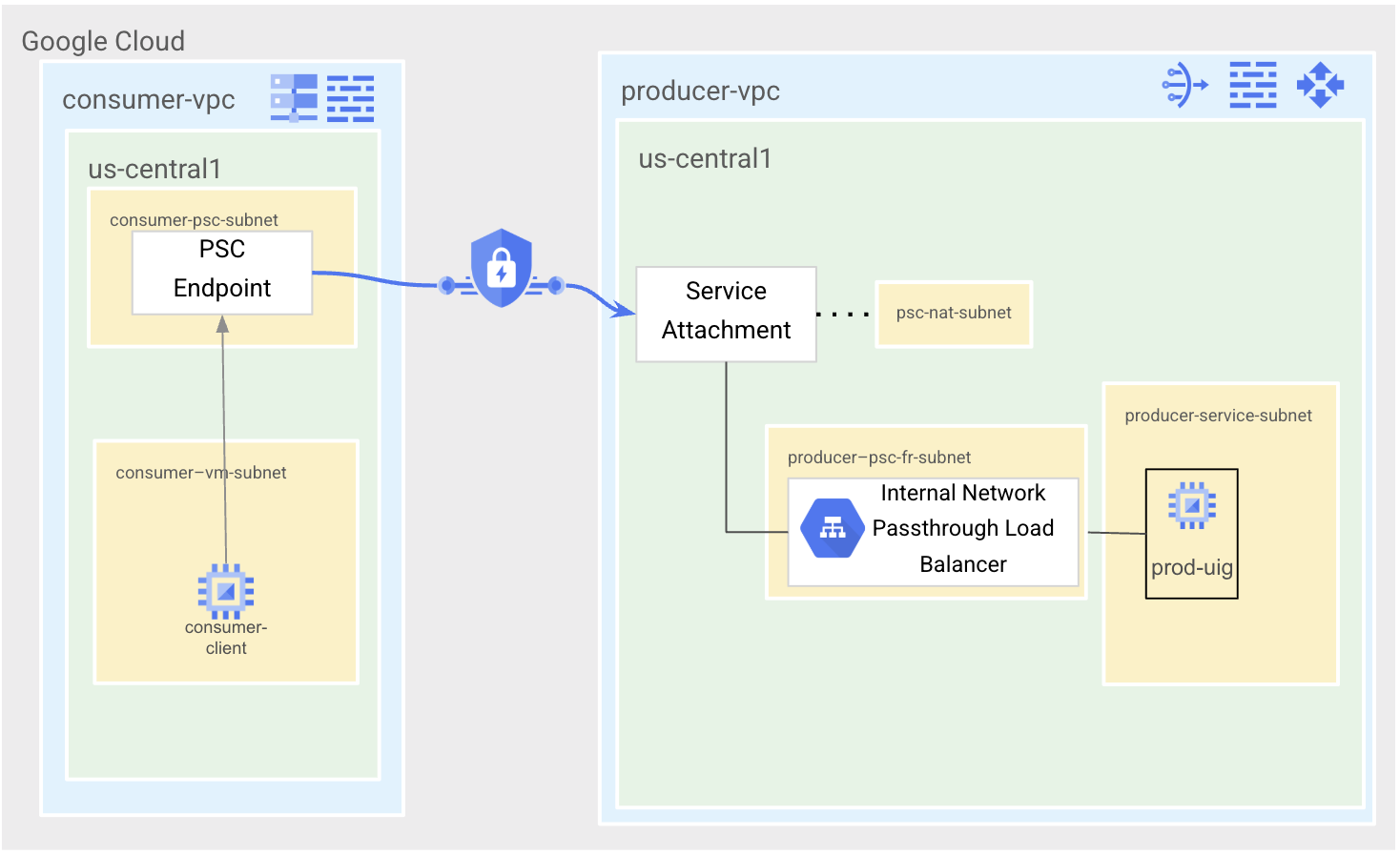

This codelab will have 4 states.

State 1 is the VPC Peering state. There will be two VPCs, consumer-vpc and producer-vpc, that are peered together. producer-vpc will have a simple Apache service exposed through an internal Network Passthrough Load Balancer. consumer-vpc will have a single consumer-client VM for testing purposes.

State 2 is the PSC test state. We will create a new forwarding rule for the producer service and associate it with a Service Attachment. We will then create a test PSC endpoint in consumer-vpc to verify that our PSC service works as expected.

State 3 is the migration state. We will reserve the producer-vpc subnet range that hosts the VPC Peering based service's forwarding rule so that the range can be migrated to consumer-vpc. We will then create a new PSC endpoint in consumer-vpc with the same IP address as the pre-existing forwarding rule in producer-vpc.

State 4 is the final PSC state. We will clean up the test PSC endpoint and delete the VPC peering between consumer-vpc and producer-vpc.

3. Setup and Requirements

Self-paced environment setup

- Sign in to the Google Cloud Console and create a new project or reuse an existing one. If you don't already have a Gmail or Google Workspace account, you must create one.

- The Project name is the display name for this project's participants. It is a character string not used by Google APIs. You can always update it.

- The Project ID is unique across all Google Cloud projects and is immutable (cannot be changed after it has been set). The Cloud Console auto-generates a unique string; usually you don't care what it is. In most codelabs, you'll need to reference your Project ID (typically identified as PROJECT_ID). If you don't like the generated ID, you might generate another random one. Alternatively, you can try your own, and see if it's available. It can't be changed after this step and remains for the duration of the project.

- For your information, there is a third value, a Project Number, which some APIs use. Learn more about all three of these values in the documentation.

- Next, you'll need to enable billing in the Cloud Console to use Cloud resources/APIs. Running through this codelab won't cost much, if anything at all. To shut down resources to avoid incurring billing beyond this tutorial, you can delete the resources you created or delete the project. New Google Cloud users are eligible for the $300 USD Free Trial program.

Start Cloud Shell

While Google Cloud can be operated remotely from your laptop, in this codelab you will be using Google Cloud Shell, a command line environment running in the Cloud.

From the Google Cloud Console, click the Cloud Shell icon on the top-right toolbar.

It should only take a few moments to provision and connect to the environment.

This virtual machine is loaded with all the development tools you'll need. It offers a persistent 5GB home directory, and runs on Google Cloud, greatly enhancing network performance and authentication. All of your work in this codelab can be done within a browser. You do not need to install anything.

4. Before you begin

Enable APIs

Inside Cloud Shell, make sure that your project is set up and configure variables.

gcloud auth login
gcloud config list project
gcloud config set project [YOUR-PROJECT-ID]
export projectid=[YOUR-PROJECT-ID]
export region=us-central1
export zone=$region-a
echo $projectid
echo $region
echo $zone
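Exported variables only live for the current Cloud Shell session; if the session is restarted, later commands that reference $projectid, $region, or $zone would run with empty values. The sketch below is a hypothetical bash helper (require_env is not part of gcloud or Cloud Shell) that you could run before each section to fail fast when a variable is missing or still holds the [YOUR-PROJECT-ID] placeholder.

```bash
# Hypothetical helper (not part of gcloud): verify that each named shell
# variable is set, non-empty, and not the codelab placeholder. Requires bash.
require_env() {
  local name status=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name.
    if [[ -z ${!name:-} ]]; then
      echo "error: \$$name is empty; re-run the export commands" >&2
      status=1
    elif [[ ${!name} == *YOUR-PROJECT-ID* ]]; then
      echo "error: \$$name still holds the [YOUR-PROJECT-ID] placeholder" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage: require_env projectid region zone
```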

Enable all necessary services

gcloud services enable compute.googleapis.com
gcloud services enable networkconnectivity.googleapis.com
gcloud services enable dns.googleapis.com
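To confirm that the three APIs are enabled before you continue, you could compare them against the output of gcloud services list --enabled --format="value(config.name)", which prints one enabled service name per line. The hypothetical helper below (missing_services is illustrative, not a gcloud command) reads that list on stdin and reports any required service that is absent.

```bash
# Hypothetical helper: read enabled service names (one per line) on stdin and
# print each required service, given as an argument, that is not in the list.
# Returns non-zero if anything is missing. Requires bash.
missing_services() {
  local enabled svc status=0
  enabled=$(cat)
  for svc in "$@"; do
    # -q: quiet, -x: match the whole line, -F: treat the name literally
    if ! grep -qxF -- "$svc" <<< "$enabled"; then
      echo "not enabled: $svc"
      status=1
    fi
  done
  return "$status"
}
```

For example: gcloud services list --enabled --format="value(config.name)" | missing_services compute.googleapis.com networkconnectivity.googleapis.com dns.googleapis.com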

5. Create Producer VPC Network (Producer Activity)

VPC Network

From Cloud Shell

gcloud compute networks create producer-vpc \
--subnet-mode=custom

Create Subnets

From Cloud Shell

gcloud compute networks subnets create producer-service-subnet \
--network=producer-vpc \
--range=10.0.0.0/28 \
--region=$region

gcloud compute networks subnets create producer-fr-subnet \
--network=producer-vpc \
--range=192.168.0.0/28 \
--region=$region

Create Producer Cloud Router and Cloud NAT

From Cloud Shell

gcloud compute routers create $region-cr \
--network=producer-vpc \
--region=$region

gcloud compute routers nats create $region-nat \
--router=$region-cr \
--region=$region \
--nat-all-subnet-ip-ranges \
--auto-allocate-nat-external-ips

Create Producer Network Firewall Policy and Firewall Rules

From Cloud Shell

gcloud compute network-firewall-policies create producer-vpc-policy --global

gcloud compute network-firewall-policies associations create \
--firewall-policy producer-vpc-policy \
--network producer-vpc \
--name network-producer-vpc \
--global-firewall-policy

To allow IAP to connect to your VM instances, create a firewall rule that:

- Applies to all VM instances that you want to be accessible by using IAP.

- Allows ingress traffic from the IP range 35.235.240.0/20. This range contains all IP addresses that IAP uses for TCP forwarding.

From Cloud Shell

gcloud compute network-firewall-policies rules create 1000 \
--action ALLOW \
--firewall-policy producer-vpc-policy \
--description "SSH with IAP" \
--direction INGRESS \
--src-ip-ranges 35.235.240.0/20 \
--layer4-configs tcp:22 \
--global-firewall-policy

We will also create two more rules: one that allows Load Balancer health checks to reach the service, and one that allows traffic from VMs that connect from consumer-vpc (10.0.1.0/28).

From Cloud Shell

gcloud compute network-firewall-policies rules create 2000 \
--action ALLOW \
--firewall-policy producer-vpc-policy \
--description "LB healthchecks" \
--direction INGRESS \
--src-ip-ranges 130.211.0.0/22,35.191.0.0/16 \
--layer4-configs tcp:80 \
--global-firewall-policy

gcloud compute network-firewall-policies rules create 3000 \
--action ALLOW \
--firewall-policy producer-vpc-policy \
--description "allow access from consumer-vpc" \
--direction INGRESS \
--src-ip-ranges 10.0.1.0/28 \
--layer4-configs tcp:80 \
--global-firewall-policy

6. Producer Service Set Up (Producer Activity)

We will create a producer service with a single VM running an Apache web server that will be added to an Unmanaged Instance Group fronted with a Regional Internal Network Passthrough Load Balancer.

Create the VM and Unmanaged Instance Group

From Cloud Shell

gcloud compute instances create producer-service-vm \
--network producer-vpc \
--subnet producer-service-subnet \
--zone $zone \
--no-address \
--metadata startup-script='#! /bin/bash
sudo apt-get update
sudo apt-get install apache2 -y
a2enmod ssl
sudo a2ensite default-ssl
echo "I am a Producer Service." | \
tee /var/www/html/index.html
systemctl restart apache2'

From Cloud Shell

gcloud compute instance-groups unmanaged create prod-uig \
--zone=$zone

gcloud compute instance-groups unmanaged add-instances prod-uig \
--zone=$zone \
--instances=producer-service-vm

Create the Regional Internal Network Passthrough Load Balancer

From Cloud Shell

gcloud compute health-checks create http producer-hc \
--region=$region

gcloud compute backend-services create producer-bes \
--load-balancing-scheme=internal \
--protocol=tcp \
--region=$region \
--health-checks=producer-hc \
--health-checks-region=$region

gcloud compute backend-services add-backend producer-bes \
--region=$region \
--instance-group=prod-uig \
--instance-group-zone=$zone

gcloud compute addresses create producer-fr-ip \
--region $region \
--subnet producer-fr-subnet \
--addresses 192.168.0.2

gcloud compute forwarding-rules create producer-fr \
--region=$region \
--load-balancing-scheme=internal \
--network=producer-vpc \
--subnet=producer-fr-subnet \
--address=producer-fr-ip \
--ip-protocol=TCP \
--ports=80 \
--backend-service=producer-bes \
--backend-service-region=$region

7. Create Consumer VPC Network (Consumer Activity)

VPC Network

From Cloud Shell

gcloud compute networks create consumer-vpc \
--subnet-mode=custom

Create Subnet

From Cloud Shell

gcloud compute networks subnets create consumer-vm-subnet \
--network=consumer-vpc \
--range=10.0.1.0/28 \
--region=$region
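VPC Network Peering rejects subnet ranges that overlap between the two peered networks, so consumer-vm-subnet (10.0.1.0/28) must stay clear of producer-service-subnet (10.0.0.0/28) and producer-fr-subnet (192.168.0.0/28). The pure-bash helpers below are a sketch for checking an address plan before creating the peering; ip_to_int, cidr_range, cidrs_overlap, and find_overlaps are hypothetical names, not gcloud commands.

```bash
# Hypothetical pure-bash helpers (not gcloud commands) for checking that IPv4
# CIDR ranges do not overlap. Inputs must be in a.b.c.d/len form. Requires bash.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  # 10# forces base 10 so octets such as "08" are not read as octal.
  echo "$(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))"
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  local ip=${1%/*} len=${1#*/} base mask
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Succeed if two CIDR blocks share at least one address.
cidrs_overlap() {
  local a_lo a_hi b_lo b_hi
  read -r a_lo a_hi <<< "$(cidr_range "$1")"
  read -r b_lo b_hi <<< "$(cidr_range "$2")"
  (( a_lo <= b_hi && b_lo <= a_hi ))
}

# Print every overlapping pair between two space-separated lists of ranges;
# return non-zero if any pair overlaps.
find_overlaps() {
  local -a left right
  local a b status=0
  read -r -a left <<< "$1"
  read -r -a right <<< "$2"
  for a in "${left[@]}"; do
    for b in "${right[@]}"; do
      if cidrs_overlap "$a" "$b"; then
        echo "overlap: $a <-> $b"
        status=1
      fi
    done
  done
  return "$status"
}
```

For this codelab's plan, find_overlaps "10.0.0.0/28 192.168.0.0/28" "10.0.1.0/28" prints nothing and succeeds. To check a single address, such as 192.168.0.2 against producer-fr-subnet, pass it as a /32.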

Create Consumer Network Firewall Policy and Firewall Rules

We'll create another Network Firewall Policy for the consumer-vpc.

From Cloud Shell

gcloud compute network-firewall-policies create consumer-vpc-policy --global

gcloud compute network-firewall-policies associations create \
--firewall-policy consumer-vpc-policy \
--network consumer-vpc \
--name network-consumer-vpc \
--global-firewall-policy

gcloud compute network-firewall-policies rules create 1000 \
--action ALLOW \
--firewall-policy consumer-vpc-policy \
--description "SSH with IAP" \
--direction INGRESS \
--src-ip-ranges 35.235.240.0/20 \
--layer4-configs tcp:22 \
--global-firewall-policy

8. Create VPC Peer

Producer Activity

From Cloud Shell

gcloud compute networks peerings create producer-vpc-peering \
--network=producer-vpc \
--peer-project=$projectid \
--peer-network=consumer-vpc

Consumer Activity

From Cloud Shell

gcloud compute networks peerings create consumer-vpc-peering \
--network=consumer-vpc \
--peer-project=$projectid \
--peer-network=producer-vpc

Confirm the peering is established by checking the list of routes in the consumer-vpc. You should see routes for both consumer-vpc and producer-vpc.

Consumer Activity

From Cloud Shell

gcloud compute routes list --filter="network=consumer-vpc"

Expected Output

NAME: default-route-49dda7094977e231
NETWORK: consumer-vpc
DEST_RANGE: 0.0.0.0/0
NEXT_HOP: default-internet-gateway
PRIORITY: 1000

NAME: default-route-r-10d65e16cc6278b2
NETWORK: consumer-vpc
DEST_RANGE: 10.0.1.0/28
NEXT_HOP: consumer-vpc
PRIORITY: 0

NAME: peering-route-496d0732b4f11cea
NETWORK: consumer-vpc
DEST_RANGE: 192.168.0.0/28
NEXT_HOP: consumer-vpc-peering
PRIORITY: 0

NAME: peering-route-b4f9d3acc4c08d55
NETWORK: consumer-vpc
DEST_RANGE: 10.0.0.0/28
NEXT_HOP: consumer-vpc-peering
PRIORITY: 0
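Rather than reading the route table by eye, you could ask gcloud for only the destination range and the peering next hop with --format="value(destRange,nextHopPeering)", which prints tab-separated fields, one route per line. The hypothetical helper below (peering_routes is illustrative) filters that output down to the ranges learned over a given peering; for consumer-vpc-peering you would expect 192.168.0.0/28 and 10.0.0.0/28, matching the output above.

```bash
# Hypothetical helper: read "DEST_RANGE<TAB>NEXT_HOP_PEERING" lines on stdin,
# for example from
#   gcloud compute routes list --filter="network=consumer-vpc" \
#     --format="value(destRange,nextHopPeering)"
# and print the destination ranges whose next hop is the named peering.
peering_routes() {
  local peering=$1 dest hop
  while IFS=$'\t' read -r dest hop; do
    if [[ $hop == "$peering" ]]; then
      printf '%s\n' "$dest"
    fi
  done
}
```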

9. Create DNS Zone (Consumer Activity)

We will create a Cloud DNS Private Zone so that the consumer calls the producer service by DNS name rather than by private IP address, which makes for a more realistic example.

We will add an A record to the example.com domain pointing service.example.com to the Network Passthrough Load Balancer Forwarding Rule IP address we created previously. That Forwarding Rule IP address is 192.168.0.2.

From Cloud Shell

gcloud dns managed-zones create "producer-service" \
--dns-name=example.com \
--description="producer service dns" \
--visibility=private \
--networks=consumer-vpc

gcloud dns record-sets transaction start \
--zone="producer-service"

gcloud dns record-sets transaction add 192.168.0.2 \
--name=service.example.com \
--ttl=300 \
--type=A \
--zone="producer-service"

gcloud dns record-sets transaction execute \
--zone="producer-service"

10. Test Producer Service Over VPC Peer (Consumer Activity)

At this point, the State 1 architecture has been created.

Create consumer-client VM

From Cloud Shell

gcloud compute instances create consumer-client \
--zone=$zone \
--subnet=consumer-vm-subnet \
--no-address

Test Connectivity

From Cloud Shell

gcloud compute ssh \
--zone "$zone" "consumer-client" \
--tunnel-through-iap \
--project $projectid

From consumer-client VM

curl service.example.com

Expected Output

I am a Producer Service.
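producer-service-vm installs Apache from its startup script, so a curl issued soon after the VM is created may fail before the web server is up. A small retry loop avoids misreading that as a connectivity problem. The sketch below is a hypothetical helper (retry_until_match is not a standard tool) that you could define on consumer-client; it reruns a command until the output contains an expected string or the attempts run out.

```bash
# Hypothetical helper (not a standard tool): rerun a command until its output
# contains EXPECTED, up to ATTEMPTS times, sleeping DELAY seconds in between.
# Prints the matching output and returns 0, or returns 1 if it never matched.
retry_until_match() {
  local expected=$1 attempts=$2 delay=$3 i out
  shift 3
  for (( i = 1; i <= attempts; i++ )); do
    # Ignore the command's exit status; only its output is compared.
    out=$("$@" 2>/dev/null) || true
    if [[ $out == *"$expected"* ]]; then
      printf '%s\n' "$out"
      return 0
    fi
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, on consumer-client: retry_until_match "I am a Producer Service." 10 6 curl -s --max-time 5 service.example.com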

From consumer-client VM

exit

11. Prepare Service for Private Service Connect (Producer Activity)

Now that the initial setup is complete, we will begin preparing the VPC-peered service for migration to Private Service Connect. In this section, we will change producer-vpc so that the service is exposed through a Service Attachment. Because the existing forwarding rule subnet will later be migrated to consumer-vpc to keep the service's IP address intact, we need a new subnet and a new forwarding rule within that subnet for the Service Attachment.

Create the subnet where the new load balancer forwarding rule IP will be hosted.

From Cloud Shell

gcloud compute networks subnets create producer-psc-fr-subnet \
--network=producer-vpc \
--range=10.0.2.64/28 \
--region=$region

Create the load balancer forwarding rule internal IP address.

From Cloud Shell

gcloud compute addresses create producer-psc-ip \
--region $region \
--subnet producer-psc-fr-subnet \
--addresses 10.0.2.66

Create the new load balancer forwarding rule. This rule is configured to use the same backend service and health checks that we configured previously.

From Cloud Shell

gcloud compute forwarding-rules create psc-service-fr \
--region=$region \
--load-balancing-scheme=internal \
--network=producer-vpc \
--subnet=producer-psc-fr-subnet \
--address=producer-psc-ip \
--ip-protocol=TCP \
--ports=80 \
--backend-service=producer-bes \
--backend-service-region=$region

The psc-nat-subnet will be associated with the PSC Service Attachment for the purpose of Network Address Translation. For production use cases, size this subnet appropriately to support the number of connected endpoints. See the PSC NAT subnet sizing documentation for more information.

From Cloud Shell

gcloud compute networks subnets create psc-nat-subnet \
--network=producer-vpc \
--range=10.100.100.0/28 \
--region=$region \
--purpose=PRIVATE_SERVICE_CONNECT
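As a rough aid for the sizing guidance above, the sketch below picks the smallest NAT subnet for an expected number of consumer endpoints. It rests on two assumptions that you should confirm in the PSC NAT subnet sizing documentation: each connected endpoint consumes one address from the NAT subnet, and four addresses in the range are reserved, as in ordinary subnets. min_psc_nat_prefix is a hypothetical name. With the per-project connection limit of 5 that this codelab sets on the service attachment, it returns 28, consistent with the /28 used here.

```bash
# Hypothetical sizing helper. ASSUMPTIONS (verify against the PSC NAT subnet
# sizing documentation): one NAT address per connected endpoint, and a fixed
# number of reserved addresses per range (default 4).
# Prints the largest prefix length (smallest subnet) that fits, starting at /29.
min_psc_nat_prefix() {
  local endpoints=$1 reserved=${2:-4} len
  for (( len = 29; len >= 8; len-- )); do
    if (( (1 << (32 - len)) - reserved >= endpoints )); then
      echo "$len"
      return 0
    fi
  done
  return 1
}
```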

We must add one more firewall rule to the Network Firewall Policy to allow traffic from psc-nat-subnet. When consumers access the service through PSC, the traffic reaches the producer with a source address from psc-nat-subnet.

From Cloud Shell

gcloud compute network-firewall-policies rules create 2001 \
--action ALLOW \
--firewall-policy producer-vpc-policy \
--description "allow PSC NAT subnet" \
--direction INGRESS \
--src-ip-ranges 10.100.100.0/28 \
--layer4-configs tcp:80 \
--global-firewall-policy

Create the service attachment and note the service attachment URI to configure the PSC endpoint in the next section.

From Cloud Shell

gcloud compute service-attachments create producer-sa \
--region=$region \
--producer-forwarding-rule=psc-service-fr \
--connection-preference=ACCEPT_MANUAL \
--consumer-accept-list=$projectid=5 \
--nat-subnets=psc-nat-subnet

From Cloud Shell

gcloud compute service-attachments describe producer-sa --region=$region

Sample Output

connectionPreference: ACCEPT_MANUAL
consumerAcceptLists:
- connectionLimit: 5
  projectIdOrNum: $projectid
creationTimestamp: '2025-04-24T11:23:09.886-07:00'
description: ''
enableProxyProtocol: false
fingerprint: xxx
id: 'xxx'
kind: compute#serviceAttachment
name: producer-sa
natSubnets:
- https://www.googleapis.com/compute/v1/projects/$projectid/regions/$region/subnetworks/psc-nat-subnet
pscServiceAttachmentId:
  high: 'xxx'
  low: 'xxx'
reconcileConnections: false
region: https://www.googleapis.com/compute/v1/projects/$projectid/regions/$region
selfLink: https://www.googleapis.com/compute/v1/projects/$projectid/regions/$region/serviceAttachments/producer-sa
targetService: https://www.googleapis.com/compute/v1/projects/$projectid/regions/$region/forwardingRules/psc-service-fr
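The selfLink in the describe output is a full URL, while this codelab passes the shorter projects/PROJECT/regions/REGION/serviceAttachments/NAME form to --target-service-attachment. The hypothetical helper below (sa_relative_uri is illustrative) strips the https://www.googleapis.com/compute/v1/ prefix and checks that the result names a service attachment, so you could keep the URI in a variable instead of copying it by hand.

```bash
# Hypothetical helper: turn a Compute Engine selfLink into the relative
# resource name form used in this codelab, rejecting anything that is not a
# regional service attachment. Already-relative input is passed through.
sa_relative_uri() {
  local uri=${1#https://www.googleapis.com/compute/v1/}
  if [[ $uri =~ ^projects/[^/]+/regions/[^/]+/serviceAttachments/[^/]+$ ]]; then
    printf '%s\n' "$uri"
  else
    echo "not a service attachment URI: $1" >&2
    return 1
  fi
}
```

For example: export sa_uri=$(sa_relative_uri "$(gcloud compute service-attachments describe producer-sa --region=$region --format='value(selfLink)')"), then pass --target-service-attachment=$sa_uri when creating the endpoints.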

12. Connect "test" Consumer PSC Endpoint to Producer Service and Test (Consumer Activity)

The architecture is now in State 2.

At this point, the existing producer service exposed over VPC Peering is still live and working properly in a Production scenario. We will create a "test" PSC endpoint to ensure that the exposed Service Attachment is functioning properly before we initiate an outage period to migrate the current VPC Peering subnet to the consumer VPC. Our end state connectivity will be a PSC endpoint with the same IP address as the current forwarding rule for the VPC Peering based service.

Create PSC Endpoint

From Cloud Shell

gcloud compute addresses create test-psc-endpoint-ip \
--region=$region \
--subnet=consumer-vm-subnet \
--addresses 10.0.1.3

The target service below will be the Service Attachment URI that you noted in the last step.

From Cloud Shell

gcloud compute forwarding-rules create test-psc-endpoint \
--region=$region \
--network=consumer-vpc \
--address=test-psc-endpoint-ip \
--target-service-attachment=projects/$projectid/regions/$region/serviceAttachments/producer-sa
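Because the service attachment uses ACCEPT_MANUAL, the endpoint only carries traffic once its connection is accepted. You can read the endpoint's connection state with gcloud compute forwarding-rules describe test-psc-endpoint --region=$region --format="value(pscConnectionStatus)". The hypothetical helper below (psc_status_ok is illustrative) turns that value into a pass/fail check with a short hint; the hint wording is a paraphrase, so consult the PSC documentation for the authoritative meaning of each status.

```bash
# Hypothetical helper: succeed only for an ACCEPTED PSC connection status and
# print a short, paraphrased hint to stderr for any other value.
psc_status_ok() {
  case "$1" in
    ACCEPTED)
      return 0 ;;
    PENDING)
      echo "PENDING: not accepted yet; check the service attachment's consumer accept list" >&2 ;;
    REJECTED)
      echo "REJECTED: the producer rejected this connection" >&2 ;;
    NEEDS_ATTENTION)
      echo "NEEDS_ATTENTION: accepted, but a producer-side issue is blocking traffic" >&2 ;;
    CLOSED)
      echo "CLOSED: the connection was closed, for example because the service attachment was deleted" >&2 ;;
    *)
      echo "unexpected status: '$1'" >&2 ;;
  esac
  return 1
}
```

For example: psc_status_ok "$(gcloud compute forwarding-rules describe test-psc-endpoint --region=$region --format='value(pscConnectionStatus)')"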

Test the "test" PSC Endpoint

From Cloud Shell

gcloud compute ssh \
--zone "$zone" "consumer-client" \
--tunnel-through-iap \
--project $projectid

From consumer-client

curl 10.0.1.3

Expected Output

I am a Producer Service.

From consumer-client

exit

13. Migrate the Existing Producer Forwarding Rule Subnet

Performing these steps will initiate an outage for the live VPC Peering based Producer service. We will now migrate the forwarding rule subnet from producer-vpc to consumer-vpc using the Internal Ranges API. The internal range locks the address range during the interim period between deleting the subnet in producer-vpc and recreating it in consumer-vpc, so that the range can only be used for this migration.

The Internal Ranges API requires that you reserve the existing VPC peering forwarding rule subnet (producer-fr-subnet, 192.168.0.0/28) and designate a target subnet name in consumer-vpc (consumer-psc-subnet). We will create a subnet with this name in consumer-vpc in a later step.

Reserve the producer-fr-subnet for migration

Producer Activity

From Cloud Shell

gcloud network-connectivity internal-ranges create producer-peering-internal-range \
--ip-cidr-range=192.168.0.0/28 \
--network=producer-vpc \
--usage=FOR_MIGRATION \
--migration-source=projects/$projectid/regions/$region/subnetworks/producer-fr-subnet \
--migration-target=projects/$projectid/regions/$region/subnetworks/consumer-psc-subnet

Run a describe on the internal-range that we created to view the state of the subnet.

Producer Activity

From Cloud Shell

gcloud network-connectivity internal-ranges describe producer-peering-internal-range

Sample Output

createTime: '2025-04-24T19:26:10.589343291Z'
ipCidrRange: 192.168.0.0/28
migration:
  source: projects/$projectid/regions/$region/subnetworks/producer-fr-subnet
  target: projects/$projectid/regions/$region/subnetworks/consumer-psc-subnet
name: projects/$projectid/locations/global/internalRanges/producer-peering-internal-range
network: https://www.googleapis.com/compute/v1/projects/$projectid/global/networks/producer-vpc
peering: FOR_SELF
updateTime: '2025-04-24T19:26:11.521960016Z'
usage: FOR_MIGRATION
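Because the next step starts the outage, it can help to check the reservation programmatically first. You could request only the relevant fields with --format="value(usage,migration.target)". check_migration_range below is a hypothetical helper that confirms the range is reserved FOR_MIGRATION and targets the expected subnet name.

```bash
# Hypothetical helper. $1 is the tab-separated output of
#   gcloud network-connectivity internal-ranges describe producer-peering-internal-range \
#     --format="value(usage,migration.target)"
# $2 is the expected target subnet name (here, consumer-psc-subnet).
check_migration_range() {
  local usage target
  IFS=$'\t' read -r usage target <<< "$1"
  if [[ $usage != FOR_MIGRATION ]]; then
    echo "unexpected usage: ${usage:-<empty>}" >&2
    return 1
  fi
  # Compare only the last path segment (the subnet name) of the target URI.
  if [[ ${target##*/} != "$2" ]]; then
    echo "unexpected migration target: ${target:-<empty>}" >&2
    return 1
  fi
}
```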

Delete the VPC Peering based Forwarding Rule and Subnet

Producer Activity

From Cloud Shell

gcloud compute forwarding-rules delete producer-fr --region=$region
gcloud compute addresses delete producer-fr-ip --region=$region
gcloud compute networks subnets delete producer-fr-subnet --region=$region

Migrate the Subnet

Migrate the subnet to consumer-vpc by creating a new subnet using the internal range we created earlier. The name of this subnet must match the target we designated earlier (consumer-psc-subnet). The PEER_MIGRATION purpose marks the subnet as reserved for subnet migration between peered VPCs. With this purpose, the subnet can only contain reserved static IP addresses and PSC endpoints.

Consumer Activity

From Cloud Shell

gcloud compute networks subnets create consumer-psc-subnet \
--purpose=PEER_MIGRATION \
--network=consumer-vpc \
--range=192.168.0.0/28 \
--region=$region

14. Create the End State PSC Endpoint (Consumer Activity)

At this point, the Producer service is still down. The subnet that we just created is still locked and can only be used for the specific purpose of migration. You can test this by attempting to create a VM in this subnet. The VM creation will fail.

From Cloud Shell

gcloud compute instances create test-consumer-vm \
--zone=$zone \
--subnet=consumer-psc-subnet \
--no-address

Expected Output

ERROR: (gcloud.compute.instances.create) Could not fetch resource: - Subnetwork must have purpose=PRIVATE.
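To make this negative test self-checking rather than something you confirm by eye, you could wrap it in a small helper that succeeds only when the command fails with the expected message. expect_failure below is hypothetical. If the VM creation unexpectedly succeeds, delete test-consumer-vm before continuing.

```bash
# Hypothetical helper: run a command that is expected to fail, and succeed
# only if it does fail and its combined stdout/stderr contains the pattern.
expect_failure() {
  local pattern=$1 out
  shift
  if out=$("$@" 2>&1); then
    echo "command unexpectedly succeeded: $*" >&2
    return 1
  fi
  [[ $out == *"$pattern"* ]]
}
```

For example: expect_failure "purpose=PRIVATE" gcloud compute instances create test-consumer-vm --zone=$zone --subnet=consumer-psc-subnet --no-address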

We can only use this subnet to create a PSC endpoint. Note that the IP address we create uses the same IP as the forwarding rule that our producer service used over the VPC Peer.

From Cloud Shell

gcloud compute addresses create psc-endpoint-ip \
--region=$region \
--subnet=consumer-psc-subnet \
--addresses 192.168.0.2

Once again, you must use the same Service Attachment URI that you noted earlier and that was also used to create the "test" PSC Endpoint.

From Cloud Shell

gcloud compute forwarding-rules create psc-endpoint \
--region=$region \
--network=consumer-vpc \
--address=psc-endpoint-ip \
--target-service-attachment=projects/$projectid/regions/$region/serviceAttachments/producer-sa

15. Test the End State PSC Endpoint (Consumer Activity)

At this point, you are at the State 3 architecture.

From Cloud Shell

gcloud compute ssh \
--zone "$zone" "consumer-client" \
--tunnel-through-iap \
--project $projectid

From consumer-client VM

curl service.example.com

Expected Output

I am a Producer Service.

From consumer-client VM

exit

At this point, the outage has ended and the service is live again. Note that we did not have to make any changes to the existing DNS. No consumer-side client changes need to be made. Applications can just resume operations to the migrated service.

16. Migration Clean-up

To finalize the migration, we need to perform a few clean-up steps to unlock and delete resources.

Unlock the Internal Range subnet

This will unlock the migrated subnet so that its purpose can be changed from "PEER_MIGRATION" to "PRIVATE."

Producer Activity

From Cloud Shell

gcloud network-connectivity internal-ranges delete producer-peering-internal-range

Consumer Activity

From Cloud Shell

gcloud compute networks subnets update consumer-psc-subnet \
--region=$region \
--purpose=PRIVATE

gcloud compute networks subnets describe consumer-psc-subnet --region=$region

Sample Output

creationTimestamp: '2025-04-24T12:29:33.883-07:00'
fingerprint: xxx
gatewayAddress: 192.168.0.1
id: 'xxx'
ipCidrRange: 192.168.0.0/28
kind: compute#subnetwork
name: consumer-psc-subnet
network: https://www.googleapis.com/compute/v1/projects/$projectid/global/networks/consumer-vpc
privateIpGoogleAccess: false
privateIpv6GoogleAccess: DISABLE_GOOGLE_ACCESS
purpose: PRIVATE
region: https://www.googleapis.com/compute/v1/projects/$projectid/regions/$region
selfLink: https://www.googleapis.com/compute/v1/projects/$projectid/regions/$region/subnetworks/consumer-psc-subnet

Delete the VPC Peers

Producer Activity

From Cloud Shell

gcloud compute networks peerings delete producer-vpc-peering \
--network=producer-vpc

Consumer Activity

From Cloud Shell

gcloud compute networks peerings delete consumer-vpc-peering \
--network=consumer-vpc

Delete the "test" PSC Endpoint

Consumer Activity

From Cloud Shell

gcloud compute forwarding-rules delete test-psc-endpoint --region=$region
gcloud compute addresses delete test-psc-endpoint-ip --region=$region

17. Final Test After the Migration Clean Up (Consumer Activity)

At this point, the State 4 architecture (the final state) has been achieved.

Test the PSC Endpoint connectivity again to make sure no adverse effects are observed from the migration clean up.

From Cloud Shell

gcloud compute ssh \
--zone "$zone" "consumer-client" \
--tunnel-through-iap \
--project $projectid

From consumer-client VM

curl service.example.com

Expected Output

I am a Producer Service.

From consumer-client VM

exit

SUCCESS!

18. Cleanup steps

From Cloud Shell

gcloud compute forwarding-rules delete psc-endpoint --region=$region -q
gcloud compute addresses delete psc-endpoint-ip --region=$region -q
gcloud compute instances delete consumer-client --zone=$zone --project=$projectid -q
gcloud dns record-sets delete service.example.com --zone="producer-service" --type=A -q
gcloud dns managed-zones delete "producer-service" -q
gcloud compute network-firewall-policies rules delete 1000 --firewall-policy consumer-vpc-policy --global-firewall-policy -q
gcloud compute network-firewall-policies associations delete --firewall-policy=consumer-vpc-policy --name=network-consumer-vpc --global-firewall-policy -q
gcloud compute network-firewall-policies delete consumer-vpc-policy --global -q
gcloud compute networks subnets delete consumer-psc-subnet --region=$region -q
gcloud compute networks subnets delete consumer-vm-subnet --region=$region -q
gcloud compute networks delete consumer-vpc -q
gcloud compute service-attachments delete producer-sa --region=$region -q
gcloud compute forwarding-rules delete psc-service-fr --region=$region -q
gcloud compute addresses delete producer-psc-ip --region=$region -q
gcloud compute backend-services delete producer-bes --region=$region -q
gcloud compute health-checks delete producer-hc --region=$region -q
gcloud compute instance-groups unmanaged delete prod-uig --zone=$zone -q
gcloud compute instances delete producer-service-vm --zone=$zone --project=$projectid -q
gcloud compute network-firewall-policies rules delete 3000 --firewall-policy producer-vpc-policy --global-firewall-policy -q
gcloud compute network-firewall-policies rules delete 2001 --firewall-policy producer-vpc-policy --global-firewall-policy -q
gcloud compute network-firewall-policies rules delete 2000 --firewall-policy producer-vpc-policy --global-firewall-policy -q
gcloud compute network-firewall-policies rules delete 1000 --firewall-policy producer-vpc-policy --global-firewall-policy -q
gcloud compute network-firewall-policies associations delete --firewall-policy=producer-vpc-policy --name=network-producer-vpc --global-firewall-policy -q
gcloud compute network-firewall-policies delete producer-vpc-policy --global -q
gcloud compute routers nats delete $region-nat --router=$region-cr --region=$region -q
gcloud compute routers delete $region-cr --region=$region -q
gcloud compute networks subnets delete psc-nat-subnet --region=$region -q
gcloud compute networks subnets delete producer-psc-fr-subnet --region=$region -q
gcloud compute networks subnets delete producer-service-subnet --region=$region -q
gcloud compute networks delete producer-vpc -q
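The cleanup commands are ordered so that dependents are deleted before the resources they use (for example, psc-endpoint before psc-endpoint-ip, and the subnets before their networks). If one deletion fails, later commands that depend on it fail too, and the errors are easy to lose in the scrollback. A minimal bash sketch, using the hypothetical helpers try and report_cleanup, wraps each command so the run continues and lists the failures at the end for a second pass.

```bash
# Hypothetical helpers: record failed cleanup commands instead of stopping,
# then summarize them so they can be retried in the same order.
cleanup_failures=()

try() {
  if ! "$@"; then
    cleanup_failures+=("$*")
  fi
  return 0
}

report_cleanup() {
  if (( ${#cleanup_failures[@]} == 0 )); then
    echo "all cleanup commands succeeded"
    return 0
  fi
  printf 'failed: %s\n' "${cleanup_failures[@]}"
  return 1
}
```

For example, prefix each cleanup command with try (try gcloud compute forwarding-rules delete psc-endpoint --region=$region -q, and so on), then run report_cleanup.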

19. Congratulations!

Congratulations on completing the codelab.

What we've covered

- How to set up a VPC peering based service

- How to set up a PSC based service

- Using the Internal-Ranges API to perform subnet migration over VPC Peering to achieve a VPC Peering to PSC service migration.

- Understanding when downtime needs to occur for service migration

- Migration clean up steps